A DevOps constraint that many teams struggle with is providing self-service application environments while keeping them consistent. The two goals, much like the two opposing forces of Development and Operations teams, conflict with each other. Self-service means anyone authorized can utilize or provision an environment, and consistent means environments must be the same (to ensure apps tested in lower environments will function in prod).

I often see the two principles manifest with an inverse relationship. Either environments are highly self-service, but there is no consistency between them, or they are incredibly consistent and lacking self-service efficiency or capability. Environments should be both highly self-serviceable and consistent. So how do we do this? Get rid of static staging environments and deploy every merge to prod. Here are some reasons why:

- Maintain parity with prod: Once a PR is complete, it goes out the door. There’s no room for discrepancies between many lower, static environments and prod.

- No waiting for envs: A new environment is created for you and your app deployed as soon as you create the PR.

- Everyone owns their merges: Once you merge, it’s very clear your work is done. It’s also clear who did what and where!

- Prove deployment end to end: The whole thing from start to finish is deployed with your new changes, proving your changes work with what’s already out there.

Here’s an example.

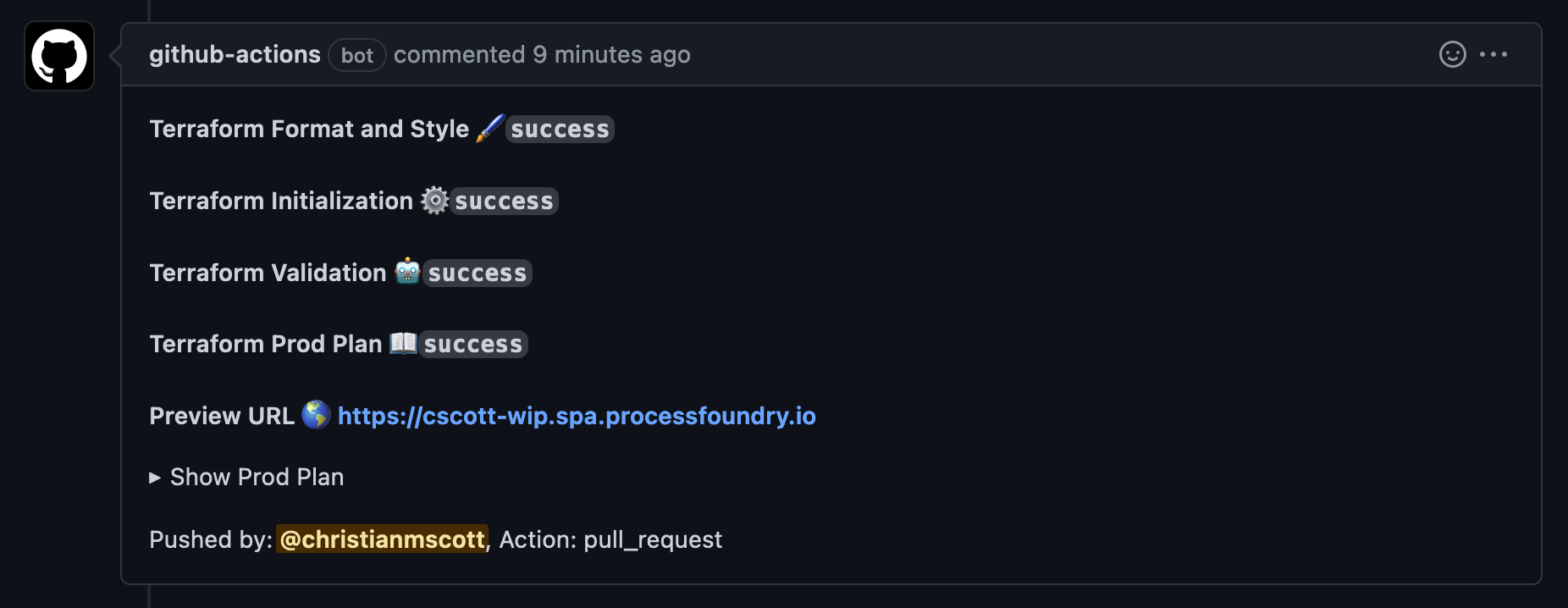

I’m deploying a single-page app to a storage account in Azure using terraform. Here’s a PR comment with some cool stuff:

The workflow for the PR created an environment based on my branch name and deployed the theoretical app within. The pipeline ran in just over a minute and now I know exactly how my app is going to function when I merge the pull request and prod is deployed. Here’s what the terraform looks like:

TF Workspace Archetype

    terraform {
      required_providers {
        azurerm = {
          source = "hashicorp/azurerm"
        }
      }

      # The state file key is not set here; it is passed per environment
      # at init time with -backend-config.
      backend "azurerm" {
        resource_group_name  = "rg-spa-init-eus"
        storage_account_name = "saspastate"
        container_name       = "tfstate"
        use_oidc             = true
        subscription_id      = "***"
        tenant_id            = "***"
      }
    }

    # Configure the Microsoft Azure Provider
    provider "azurerm" {
      use_oidc = true
      features {}
    }

    # Every environment is built from the same module.
    module "infrastructure" {
      source      = "../infrastructure"
      service     = var.service
      environment = var.environment
      region      = var.region
      domain      = var.domain
    }

Note: You can find the code for this experiment here.

Instead of defining static environments in GitHub Actions, the workflow calls this same Terraform workspace for each environment. The environment name comes from the GITHUB_HEAD_REF variable (the name of the branch to be merged). That value points Terraform at the right state file and sets the module’s environment input variable. The module contains all of our resources, so each environment is identical:

    resource "azurerm_resource_group" "main" {
      name     = "rg-${var.service}-${var.environment}-${var.region.suffix}"
      location = var.region.name

      tags = {
        app         = var.service
        environment = var.environment
        created-by  = "terraform"
      }
    }

    resource "azurerm_storage_account" "main" {
      # Storage account names allow only lowercase letters and digits,
      # so strip everything else from the generated name.
      name                     = lower(replace("sa${var.service}${var.environment}", "/[[:^alnum:]]/", ""))
      resource_group_name      = azurerm_resource_group.main.name
      location                 = azurerm_resource_group.main.location
      account_tier             = "Standard"
      account_replication_type = "LRS"

      network_rules {
        default_action = "Allow"
      }

      static_website {
        index_document = "index.html"
      }

      tags = {
        app         = var.service
        environment = var.environment
        created-by  = "terraform"
      }
    }

    resource "azurerm_storage_container" "main" {
      name                  = "${var.service}${var.environment}"
      storage_account_name  = azurerm_storage_account.main.name
      container_access_type = "container"
    }

    # Shared resource group created by the "init" environment; it holds the DNS zone.
    data "azurerm_resource_group" "init" {
      name = "rg-${var.service}-init-${var.region.suffix}"
    }

    # One CNAME per environment, pointing at the storage account's static website host.
    resource "azurerm_dns_cname_record" "main" {
      name                = var.environment
      zone_name           = "${var.service}.${var.domain}"
      resource_group_name = data.azurerm_resource_group.init.name
      ttl                 = 300
      record              = azurerm_storage_account.main.primary_web_host

      tags = {
        app         = var.service
        environment = var.environment
        created-by  = "terraform"
      }
    }
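The module reads four inputs (var.service, var.environment, var.region, and var.domain) whose declarations aren’t shown here. A minimal sketch of how they might be declared, assuming region is an object with name and suffix attributes (which the references to var.region.name and var.region.suffix imply). The file name and every default value below are my placeholders, not necessarily what the real repo uses:

```hcl
# variables.tf (hypothetical sketch; types inferred from how the module uses each input)

variable "service" {
  description = "Short application name used in resource names and tags."
  type        = string
  default     = "spa" # assumption, inferred from the "rg-spa-init-eus" resource group
}

variable "environment" {
  description = "Environment name: the branch name for PR previews, or \"prod\"."
  type        = string
  # No default: the workflow passes this with -var on every run.
}

variable "region" {
  description = "Azure region plus the short suffix used in resource names."
  type = object({
    name   = string
    suffix = string
  })
  default = {
    name   = "eastus" # assumption
    suffix = "eus"    # assumption, inferred from "rg-spa-init-eus"
  }
}

variable "domain" {
  description = "Parent DNS domain; the zone is <service>.<domain>."
  type        = string
  default     = "example.com" # placeholder
}
```

Since the environment value ends up in a DNS record name and a container name, branch names containing characters like “/” or uppercase letters would likely need sanitizing before being passed in.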

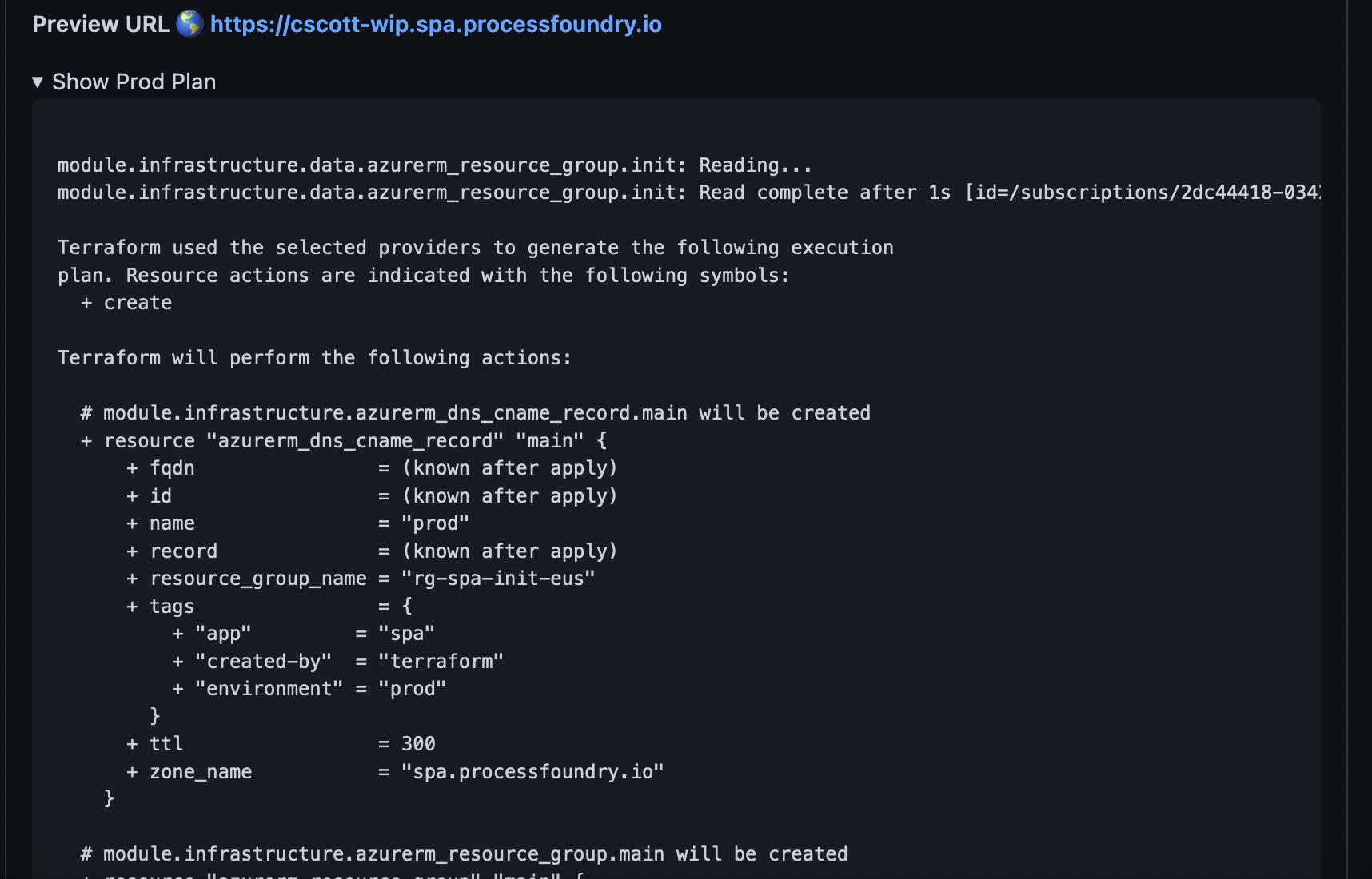

When the PR is merged, the same actions are run, except against the “prod” environment. This apply carries out the actions described in the “Prod Plan” you can see in the PR comment. So this plan:

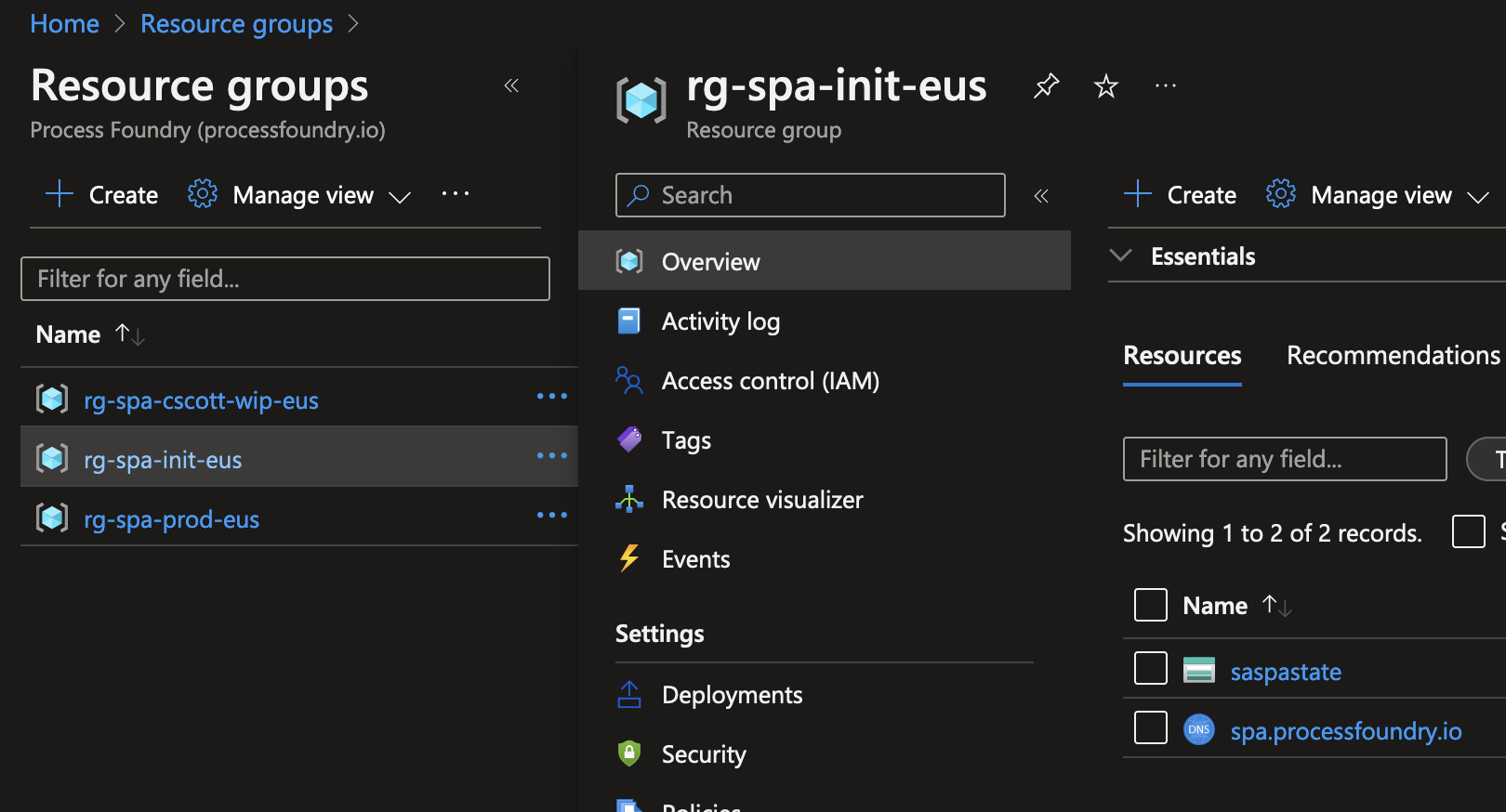

Becomes this:

Consistent. Self-Service. I like it.

Why do I need this?

Using Terraform Modules in this way is most useful for environments containing many small, atomic pieces. For example, a single-page app that lives in a storage account, with an API running on App Service in Azure. Since there are many little pieces to manage here (app service plan, app service, storage account, container registry/repository, web app load balancer/apim, and dns), it’s nice to modularize them for reuse.

Where doesn’t this make sense?

Terraform goes out the window for this as soon as you’re using an orchestrator or native platform features for environments. Microservices on Kubernetes or Nomad, for example. Here you are likely to be using Helm charts, Argo CD, Nomad job specs, or Waypoint to create dynamic environments for merge requests and prod deploys. You’d likely still use Terraform or another infrastructure as code tool to bootstrap and manage the underlying infrastructure supporting the orchestrator, following GitOps principles. Effectively, you can build your own Internal Developer Platform (IDP) this way.

Platform tools like Vercel, Netlify, Heroku, etc. have built-in functionality for merge/pull request deploys with your favorite git platform. Things are really easy here, since there is no backend infrastructure for you to manage. So if you’re using those tools, you’re all set. I’ll assume you’re just here for fun.

How to do it.

Take inventory of all the pieces your application environment needs. We’ll use our single-page app from earlier as an example. To simplify, we’ll deploy a storage account and DNS records:

- azurerm_resource_group

- azurerm_storage_account

- azurerm_storage_container

- azurerm_dns_zone

- azurerm_dns_a_record

To deploy what we want, we use conditionals to run certain terraform tasks when the pipeline is triggered for a pull request run, and others when there is a push to main (PR merge). When these terraform actions run they point at the archetypal environment workspace. The workspace archetype includes default variable values in variables.tf that apply to all environments. The rest we fill in during runs of the GitHub Actions workflow, when calling the archetype. Speaking of, here’s mine:

    name: "Single-page App Deployment with Dynamic Environments"

    on:
      push:
        branches:
          - main
      pull_request:

    jobs:
      tf-ci:
        name: "Terraform PR Validation"
        runs-on: ubuntu-latest
        environment: azure
        env:
          ARM_CLIENT_ID: ${{ secrets.ARM_CLIENT_ID }}
          ARM_SUBSCRIPTION_ID: ${{ secrets.ARM_SUBSCRIPTION_ID }}
          ARM_TENANT_ID: ${{ secrets.ARM_TENANT_ID }}
          TF_LOG: INFO
        permissions:
          pull-requests: write
          id-token: write
          contents: read
        defaults:
          run:
            working-directory: ./tf/envdna
        steps:
          - name: Checkout
            uses: actions/checkout@v3

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              terraform_version: 1.3.7

          - name: Terraform Format
            id: fmt
            run: terraform fmt -check

          # Init against the prod state first so a PR can show the prod plan.
          - name: Terraform Init
            id: init
            run: terraform init -backend-config='key=prod.tfstate'

          - name: Terraform Validate
            id: validate
            run: terraform validate -no-color

          - name: Terraform Plan
            id: plan
            if: github.event_name == 'pull_request'
            run: terraform plan -no-color -input=false -var 'environment=prod'
            continue-on-error: true

          # Re-init against a state file named after the PR branch.
          - name: Terraform Init
            id: initpr
            if: github.event_name == 'pull_request'
            run: terraform init -backend-config='key=${{ github.head_ref }}.tfstate' -reconfigure

          - name: Terraform Validate
            id: validatepr
            if: github.event_name == 'pull_request'
            run: terraform validate -no-color

          # Create or update the preview environment for this branch.
          - name: Terraform Review Apply
            id: apply
            if: github.event_name == 'pull_request'
            run: terraform apply -auto-approve -no-color -input=false -var 'environment=${{ github.head_ref }}'
            continue-on-error: true

          - name: Terraform Output
            id: tfout
            if: github.event_name == 'pull_request'
            run: terraform output -raw preview | sed 's/.$//'

          - name: Update Pull Request
            uses: actions/github-script@v6
            if: github.event_name == 'pull_request'
            env:
              PLAN: ${{ steps.plan.outputs.stdout }}
            with:
              github-token: ${{ secrets.GITHUB_TOKEN }}
              script: |
                const output = `#### Terraform Format and Style 🖌\`${{ steps.fmt.outcome }}\`
                #### Terraform Initialization ⚙️\`${{ steps.init.outcome }}\`
                #### Terraform Validation 🤖\`${{ steps.validate.outcome }}\`
                #### Terraform Prod Plan 📖\`${{ steps.plan.outcome }}\`
                #### Preview URL 🌎 [https://${{ steps.tfout.outputs.stdout }}](https://${{ steps.tfout.outputs.stdout }})

                <details><summary>Show Prod Plan</summary>\n

                \`\`\`\n
                ${process.env.PLAN}
                \`\`\`

                </details>

                Pushed by: @${{ github.actor }}, Action: ${{ github.event_name }}`;

                github.rest.issues.createComment({
                  issue_number: context.issue.number,
                  owner: context.repo.owner,
                  repo: context.repo.repo,
                  body: output
                })

          # The plan step continues on error, so fail the job here if it failed.
          - name: Terraform Plan Status
            if: steps.plan.outcome == 'failure'
            run: exit 1

          # On merge to main, apply to prod.
          - name: Terraform Apply
            if: github.ref == 'refs/heads/main' && github.event_name == 'push'
            run: terraform apply -auto-approve -input=false -var 'environment=prod'
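The Terraform Output step in the workflow reads an output named preview, which isn’t shown in the Terraform above. Here is one way it might be defined, assuming it exposes the CNAME record’s fqdn attribute. Azure DNS typically returns that value with a trailing dot, which would explain the sed call that strips the last character. The file placement and descriptions are my guesses:

```hcl
# In the infrastructure module (hypothetical outputs.tf):
# expose the hostname of the per-environment CNAME record.
output "preview" {
  description = "Hostname of the preview site for this environment."
  value       = azurerm_dns_cname_record.main.fqdn
}

# In the workspace archetype (a separate, hypothetical outputs.tf):
# re-export the module output so `terraform output -raw preview`
# can read it from the root module.
output "preview" {
  description = "Hostname of the preview site for this environment."
  value       = module.infrastructure.preview
}
```

The two blocks belong in different directories; an output has to be declared in the root module for the terraform output command to see it.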

The flow.

Put it all together and you have a nice workflow:

- Open a PR on your repo with your latest app changes. You get the plan output for prod and terraform creates an environment with your proposed changes.

- Hit the preview URL and drive around to validate your new changes. Send this preview link to others if you like!

- Merge the PR and prod deploys with your changes.

Some other things.

Of course, to set this up, there are a couple of other things you’d need to do:

- Create a service principal in your Azure AD directory and assign it the Contributor or Owner role on your Azure subscription. You can set up federated credentials for passwordless auth using the guide here.

- Run a Terraform apply locally for the “init” environment, which creates any shared resources you may have (like the DNS zone, in our example). After running the initial apply, migrate your local state file into the Azure storage account this creates by running terraform init.

- Add any steps for building, publishing, and deploying your app code to the GitHub Actions workflow. It’s likely you’ll need some other Terraform resources as well, depending on your setup.

- Here is the source code for this experiment.
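For the “init” environment mentioned in the list above, here is a rough sketch of the shared resources it might create. The resource group, storage account, and container names are taken from the backend block and data source earlier in the post; the region, the example.com domain, and the file layout are assumptions on my part, so treat this as a starting point and check it against the linked source:

```hcl
# init/main.tf (hypothetical sketch of the shared, one-time resources)

resource "azurerm_resource_group" "init" {
  name     = "rg-spa-init-eus"
  location = "eastus" # assumption
}

# Storage account and container that hold every environment's state file.
resource "azurerm_storage_account" "state" {
  name                     = "saspastate"
  resource_group_name      = azurerm_resource_group.init.name
  location                 = azurerm_resource_group.init.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

resource "azurerm_storage_container" "state" {
  name                  = "tfstate"
  storage_account_name  = azurerm_storage_account.state.name
  container_access_type = "private"
}

# DNS zone that the per-environment CNAME records are created in.
resource "azurerm_dns_zone" "main" {
  name                = "spa.example.com" # <service>.<domain>; the domain is a placeholder
  resource_group_name = azurerm_resource_group.init.name
}
```

Once this has been applied with local state, adding the azurerm backend block and re-running terraform init should prompt you to copy the local state into the new storage account.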

If you need help with DevOps and software delivery, reach out.